“Cultural differences only gain meaning within a relational fabric — they are not fixed properties of groups, but fluid practices that form, are maintained, and transformed between people.”

The debate about AI and culture touches a nerve. In a recent reflection, which I discovered via Dagmar Monnet (thank you!), Tey Bannerman discusses a study that tested major AI models against cultural values from 107 countries. The outcome? All models reflected the assumptions of English-speaking, Western European societies. None aligned with how people elsewhere in the world build trust, express respect, or resolve conflict.

Tey Bannerman gives the example: “A Japanese customer receives an AI-generated apology that is ‘efficient’ but lacks the deep cultural sense of meiwaku. The customer feels not heard, but diminished. This is not an exception, but a symptom of how AI redefines relationships.”

A sharp insight. But as an anthropologist, I feel a deeper tension beneath the surface.

Not because the distinction between cultures is irrelevant, but because the way we talk about culture too often slides into culturalisation — fixing people into assumed cultural patterns, as if culture were a script. And more importantly: the real issue is not about culture — it’s about the disappearance of the relational.

From Relational to Transactional: A Fundamental Shift

What we’re seeing today — in AI, innovation, policy — is a highly advanced form of transactional thinking. It is efficient, measurable, and linear. It makes processes predictable, steers toward output, and functions well in technical environments.

But when this logic is applied to people, relationships, and communities, something fundamental happens: the relational (the capacity to live in reciprocity, rhythm, and mutual responsiveness) disappears from view.

In my piece “From Ledger Logic to Relational Attunement”, I explore how innovation, driven by transactional logic, redefines our way of being together.

- Relationships become KPIs.

- Care becomes service.

- Education becomes information delivery.

- Even love becomes algorithmically guided.

We lose the relationship the moment we try to deliver or measure it.

“Care becomes commodity. Intimacy becomes market.”

Why Cultural Corrections in AI Aren’t Enough

In Bannerman’s example, AI fails to address meiwaku in Japan — the need to deeply acknowledge causing inconvenience. In the UAE, a discount is perceived as disrespectful. In Germany, the same discount works fine: “they like efficiency.”

But what I see here is a deeper, persistent trap in how we discuss culture: culturalisation, which reduces culture to static attributes of populations and then designs systems around those assumptions. It’s cultural UX.

But people aren’t walking culture codes. Relationships emerge between people, not between cultures. Every encounter is shaped by context, power dynamics, emotion, language, and the body.

The TOPOI model, developed by Edwin Hoffman and Arjan Verdooren, doesn’t define what culture is, but rather points to where communication can go wrong. It identifies five domains — Language, Order, Persons, Organisation, and Intentions — where misunderstandings, assumptions, and power dynamics can disrupt connection.

What matters is not which “culture” someone comes from, but how meaning is shaped — or missed — between people, in context, through interaction.

Culture, in this view, is not a fixed identity but a moving space: something that only gains meaning within the relational fabric, never apart from it.

The Absence of Relational Literacy in AI

What’s missing in this entire discussion is what I call relational metacognition: the capacity not just to think about relationships, but to think with relationships.

AI systems are trained on billions of English-language web pages. But none of those pages teach a model what it feels like to be truly heard, how rhythm, silence, and timing shape meaning in conversation, or how slowly trust develops.

In my work around BOBIP, I show that learning and innovation only become transformative when they emerge from relational processes — not when they are “rolled out” as scalable plans.

AI that reproduces only the transactional does not solve the problem; it amplifies it:

- It equates “efficiency” with goodness.

- It reduces “care” to a response.

- It flattens our language, as I wrote in “Relationship Is Not an Algorithm”.

So What About Culture?

Here’s the deeper paradox: when the relational disappears, so does culture.

Culture is not a database of habits. It is a living web of meaning, carried between people in relation. Without relation, there is no culture — only behavioural protocols, misreadings, and algorithmic stereotypes.

And this is already visible:

- In healthcare systems, where human presence is replaced by scripted empathy.

- In education, where knowledge is disconnected from the community.

- In AI, where LLMs pretend to understand relationships while only simulating them.

A Different Path: AI as Relational Space

I’m not against technology. In my essay “The Question Doula”, I describe how AI can be used relationally: not as an expert, but as a facilitator of reflection, as a space-holder for relational processes.

Imagine an AI that:

- Listens to the rhythm of a conversation instead of responding.

- Pauses instead of optimising.

- Creates presence instead of nudging us forward.

AI cannot create a relationship. But it can hold space in which relationships may emerge or deepen.

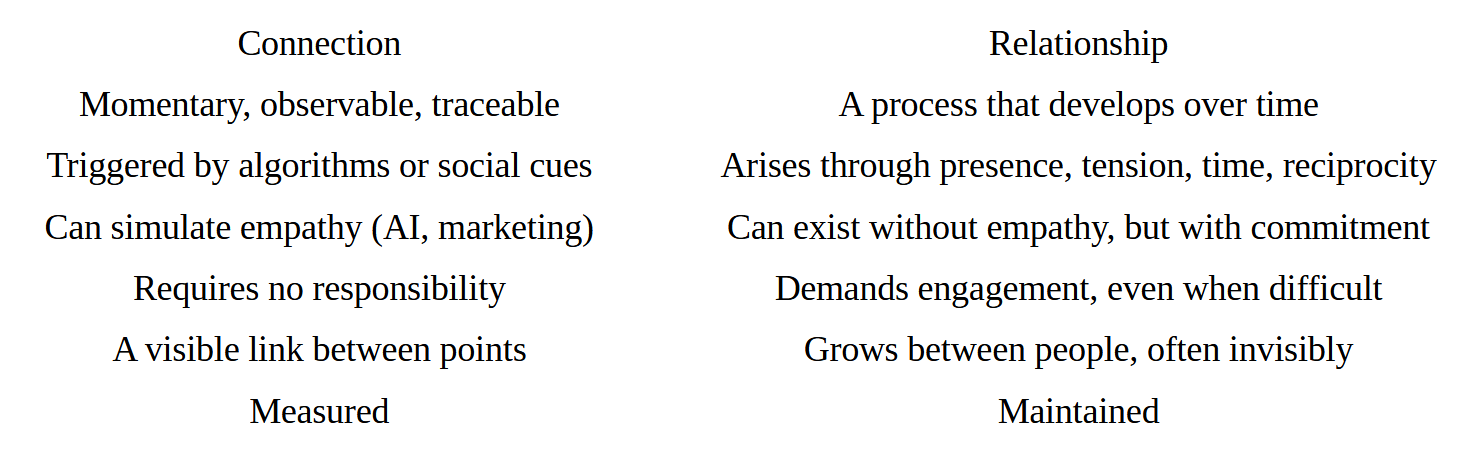

What’s the Difference Between a Connection and a Relationship?

In many conversations — about AI, care, education, communication — it’s assumed that connection equals relationship.

It doesn’t.

“In network models, we appear connected.

But being connected is not the same as being in relationship.

A connection says: ‘there is a link.’

A relationship asks: ‘what lives between us?’”

Example:

An AI chatbot can make a “connection” by generating an empathetic reply (“I understand you’re upset”), but it lacks the reciprocity and attentiveness of, for example, a doctor thinking through care together with a patient.

Conclusion: This Isn’t Just About AI

If we continue to deploy transactional systems without relational counterbalance, culture itself begins to disappear.

Because culture isn’t storage.

It’s a living mesh of meaning — and that requires relationship.

An AI that enables reflection? Yes.

An AI that protects our relational fabric?

Only if we choose to re-center the relational first.

This isn’t only about AI.

It’s about how we want to be human with each other.

- About care that isn’t a service.

- Education that isn’t measurement.

- Connection that isn’t profiling.

The question isn’t whether AI will get smarter. The real question is whether we dare to build technology that nourishes relationships — instead of quietly replacing them.

This work was inspired by a reflection by Tey Bannerman, with thanks to Dagmar Monnet.
